Hello there! This week we switch domains and take our first leap into the exciting world of reinforcement learning (RL) by diving into DeepMind’s groundbreaking classic “Playing Atari with Deep Reinforcement Learning” (2013). It was the first successful application of deep learning to RL and outperformed SOTA methods, and in some cases even humans, on a selection of Atari games.

Playing Atari with Deep Reinforcement Learning

📍 Background. After the initial success of deep neural networks, especially convolutional neural networks in computer vision problems, this paper was the first to demonstrate their applicability to reinforcement learning. The model - a Deep Q-Network (DQN) - learns to play seven Atari games purely from pixel input and outperforms even baselines that rely on heavily hand-crafted and fine-tuned features. This paper laid the foundation for the entire field of deep reinforcement learning and established DeepMind as one of the leading AI companies in the world.

🤖 What’s reinforcement learning? RL is a machine learning training method based on rewarding desired behaviors and/or punishing undesired ones. A typical setting consists of an agent interacting with an environment. The agent can perform actions to influence the environment and receives a state and a reward (indicating if the action was good or bad) in return. The central task is then to find actions that maximize the total reward the agent can obtain. One way to learn how to choose actions is Q-Learning.
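To make the agent-environment loop concrete, here is a minimal Python sketch. The `CorridorEnv` toy environment and the `run_episode` helper are hypothetical illustrations and not part of the paper: the agent walks along five cells and only gets a reward for reaching the last one.

```python
class CorridorEnv:
    """Hypothetical toy environment: cells 0..4, reward 1.0 for reaching cell 4."""

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self):
        # Start every episode in the leftmost cell.
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        # Action 0 moves left (stopping at the wall), action 1 moves right.
        move = 1 if action == 1 else -1
        self.position = max(0, self.position + move)
        self.steps += 1
        reached_goal = self.position == self.length - 1
        reward = 1.0 if reached_goal else 0.0
        done = reached_goal or self.steps >= self.max_steps
        return self.position, reward, done


def run_episode(env, policy):
    """Let the policy act until the episode ends and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(state)                   # the agent picks an action
        state, reward, done = env.step(action)   # the environment answers
        total_reward += reward
    return total_reward


print(run_episode(CorridorEnv(), lambda state: 1))  # always right: 1.0
print(run_episode(CorridorEnv(), lambda state: 0))  # always left: 0.0
```

The two fixed policies show what "maximizing the total reward" means here: always moving right collects the reward, always moving left never does.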

🇶 Deep Q-Learning.

Q-Learning. Q-Learning attempts to learn optimal actions by defining a function Q(s,a) = R that approximates the total reward R a player can obtain until the end of the game given a current state s and action a. When the Q function is modeled as a deep neural network (here a CNN) the approach is called Deep Q-Learning.

Data. As with every other neural net, we need data to train the model. In the case of RL, this data can be obtained on the fly (online learning): it is generated by playing while the model is being trained and is stored in a buffer. This buffer consists of transitions (s,a,r,s’) that capture how an action a in a state s leads to an immediate reward r and a subsequent state s’.
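A buffer of transitions could look roughly like the following sketch. The class name `ReplayBuffer`, its methods, and the fixed capacity are our own illustrative choices and are not taken from the paper's code.

```python
import random
from collections import deque, namedtuple

# One transition (s, a, r, s') as described above.
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayBuffer:
    """Illustrative fixed-size buffer that drops the oldest transitions first."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.memory.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement from the stored transitions.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.push(state=step, action=1, reward=0.0, next_state=step + 1)

print(len(buffer))             # 3, the two oldest transitions were dropped
print(buffer.memory[0].state)  # 2
```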

Optimization. To optimize the model we need to define a loss function that tells the network how to change in order to better approximate our desired Q function. We can obtain a loss function by decomposing our Q function. By definition the following equation holds:

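Q(s,a) = r + γ · max Q(s’,a’), with the maximum taken over the possible next actions a’.

In words: The Q value (output of the Q function) for the current state and action should equal the immediate reward r (which we get for performing a in s) plus the discounted Q value of the best action a’ in the next state s’. The discount factor γ (between 0 and 1) weights future rewards less than immediate ones; with γ = 1 and a’ being the best next action, this is the plain sum Q(s,a) = R = r + Q(s’,a’). We can use this fact and define Q(s,a) as our prediction and r + γ · max Q(s’,a’) as our ground truth label.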
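Here is a minimal sketch of how prediction, label, and loss fit together. It assumes, as in the paper, that the label uses the highest Q value of the next state and a discount factor `gamma` (setting `gamma=1.0` gives the undiscounted sum), and that a transition which ends the game has no future reward. The function names and the `done` flag are illustrative; the squared error is the loss form used in the paper.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """Label for Q(s, a): immediate reward plus discounted best next Q value."""
    if done:
        # The game is over, so there is no future reward to add.
        return reward
    return reward + gamma * max(next_q_values)


def squared_td_error(predicted_q, target):
    """Squared difference between the prediction Q(s, a) and its label."""
    return (predicted_q - target) ** 2


# Example: reward 1.0, next-state Q values for two actions, gamma = 0.5.
label = td_target(reward=1.0, next_q_values=[0.5, 2.0], done=False, gamma=0.5)
print(label)                         # 2.0
print(squared_td_error(1.5, label))  # 0.25
```

Note that the label itself is computed from the model's own Q values, so the "ground truth" moves as the network improves.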

Adapted Q function. By default the Q function takes in a state-action pair and outputs a single Q value. With a deep neural network, this would require a separate forward pass for every possible action in a state. The cost scales linearly with the number of available actions and quickly becomes computationally expensive. A more efficient approach is to adapt the Q function so that it takes in only a state and outputs one Q value per action in a single pass.
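The idea can be sketched with a tiny stand-in model. Instead of the paper's CNN we assume a single linear layer with one output row per action; the weights, the two-element state, and the function names are made up for illustration.

```python
def q_values(weights, biases, state):
    """One pass: state features in, one Q value per action out."""
    return [
        sum(w * x for w, x in zip(row, state)) + b
        for row, b in zip(weights, biases)
    ]


def greedy_action(q):
    """Index of the action with the highest Q value."""
    return max(range(len(q)), key=lambda a: q[a])


# Hypothetical model with 2 state features and 3 actions.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
biases = [0.0, 0.0, -0.5]
state = [2.0, 3.0]

q = q_values(weights, biases, state)
print(q)                 # [2.0, 3.0, 4.5]
print(greedy_action(q))  # 2
```

Picking the best action then only needs one model evaluation followed by an argmax, no matter how many actions the game offers.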

📈 Training algorithm.

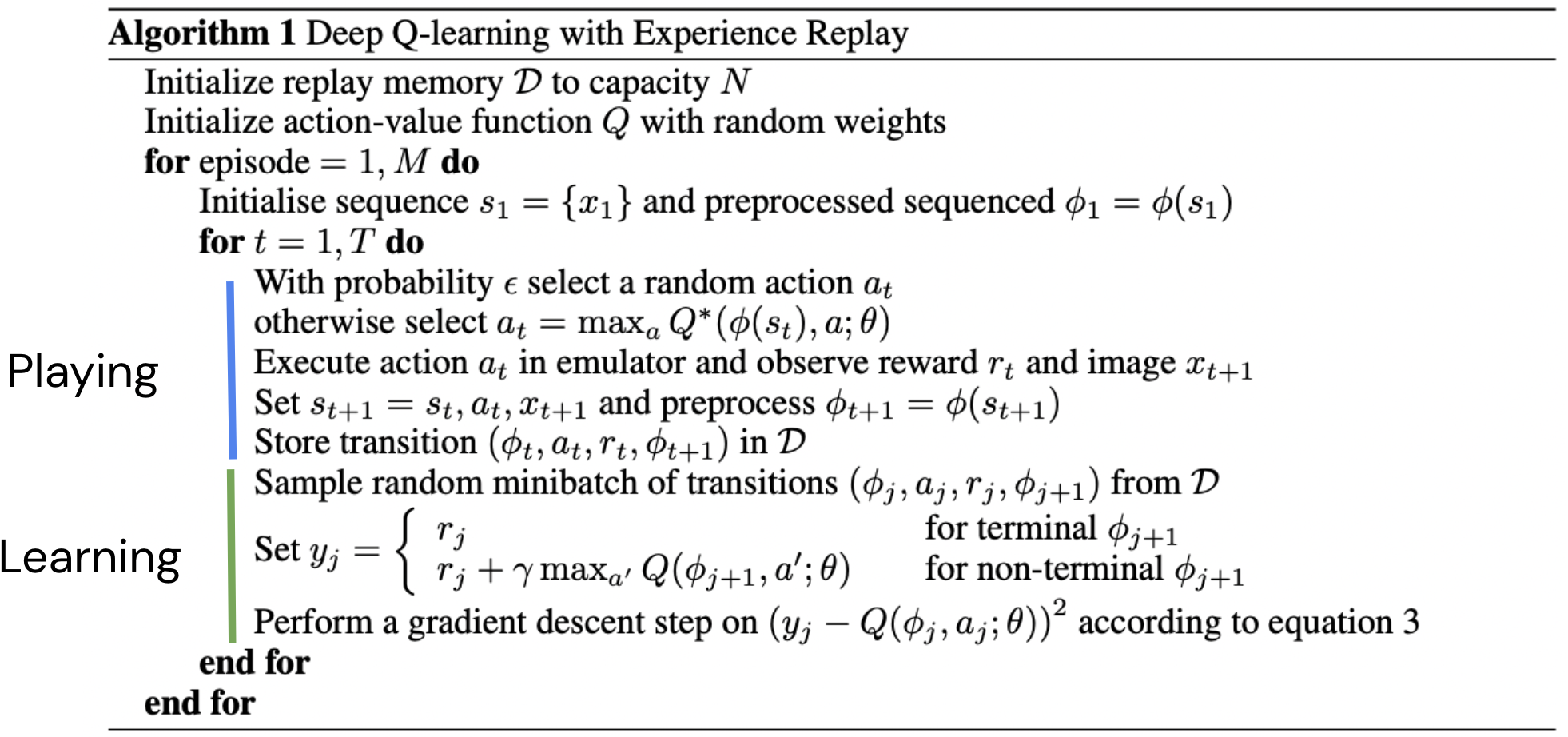

The training is conceptually split into 2 parts: Playing and Learning.

During the playing phase we select and perform an action and store the resulting transition in our replay buffer for future use. In the Learning part we randomly sample a minibatch from our buffer and perform a gradient descent step. The random sampling is important to smooth the training distribution and overcome the strong correlation between consecutive transitions in a game (this technique is referred to as experience replay).
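The two phases can be sketched on a toy problem. Everything below is a simplified illustration and not the paper's setup: a lookup table stands in for the CNN, the five-cell corridor replaces Atari, and the hyperparameters are arbitrary. The epsilon-greedy action choice (a random action with probability `epsilon`) is the exploration rule used in the paper's algorithm.

```python
import random
from collections import deque

N_STATES, GOAL, ACTIONS = 5, 4, (0, 1)  # action 0 = left, 1 = right


def env_step(state, action):
    """Toy corridor: reward 1.0 for reaching the rightmost cell."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes=200, gamma=0.9, lr=0.5, epsilon=0.3, batch_size=8, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q table instead of a network
    buffer = deque(maxlen=500)                 # replay buffer
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 50:
            # Playing: epsilon-greedy action, ties broken at random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = env_step(state, action)
            buffer.append((state, action, reward, next_state, done))
            state, steps = next_state, steps + 1

            # Learning: sample a random minibatch and move Q towards the labels.
            if len(buffer) >= batch_size:
                for s, a, r, s2, d in rng.sample(list(buffer), batch_size):
                    target = r if d else r + gamma * max(q[s2])
                    q[s][a] += lr * (target - q[s][a])
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(greedy_policy)  # greedy action per non-goal cell after training
```

Because the minibatch is drawn from the whole buffer, a single update mixes transitions from different moments of different episodes, which is exactly the decorrelation effect described above.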

Model architecture.

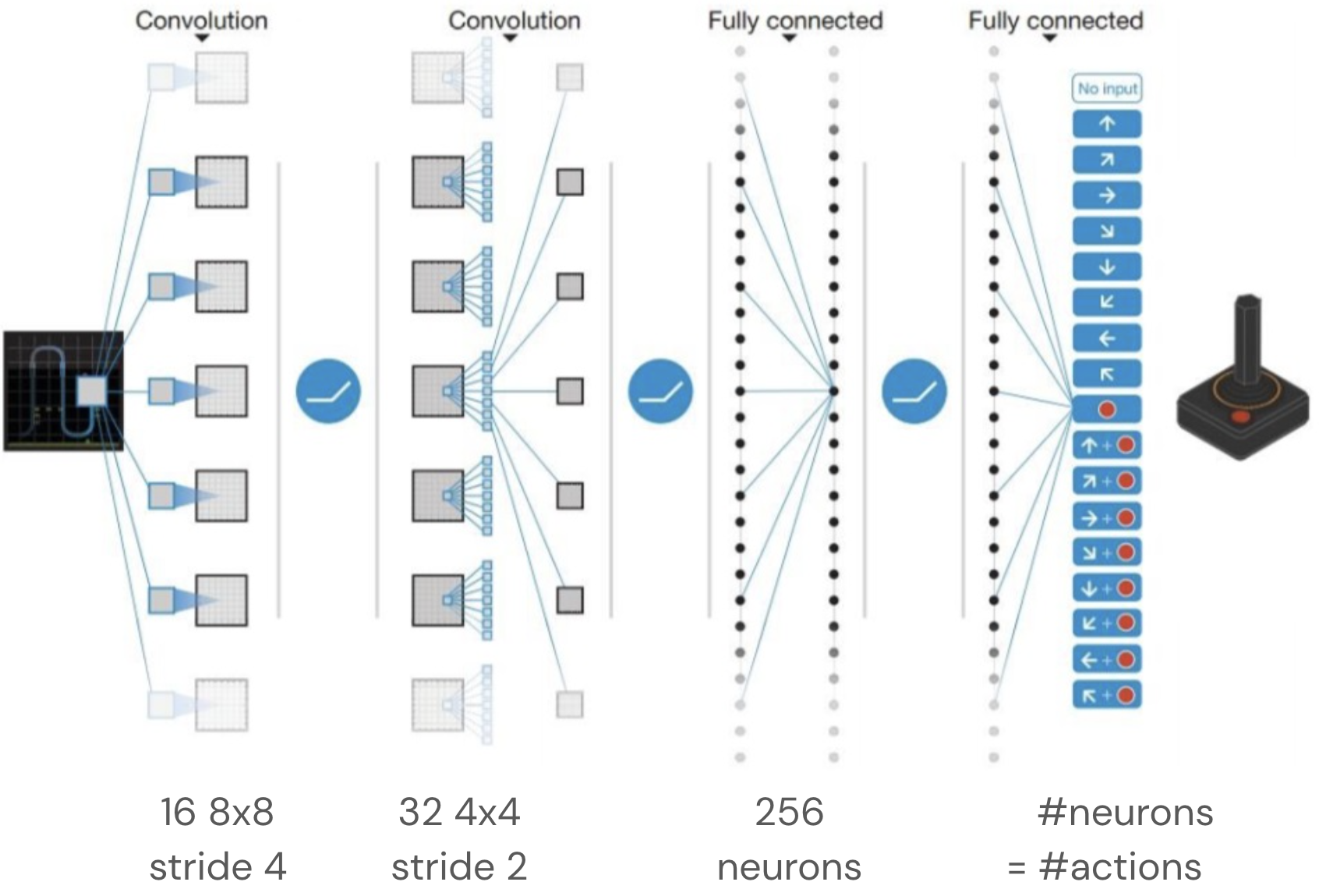

Judged by today’s standards, the model is astonishingly simple.

It takes an 84 × 84 × 4 image produced by a preprocessing function (downsampling, cropping, gray-scaling, stacking 4 images) and feeds it through two convolutional and two linear layers with ReLUs in between. The number of output neurons corresponds to the number of valid actions, which varies between 4 and 18 for the evaluated corpus of games (Figure adapted from here).
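To get a feeling for how small the network is, the sketch below walks through the layer shapes. The layer sizes (16 filters of 8 × 8 with stride 4, 32 filters of 4 × 4 with stride 2, 256 hidden units) are taken from the paper rather than from this post, and the helper names are our own. Padding is assumed to be zero.

```python
def conv_out(size, kernel, stride):
    """Spatial output size of a convolution without padding."""
    return (size - kernel) // stride + 1


def dqn_shapes(n_actions, in_size=84, in_channels=4):
    """Layer shapes and parameter count for the two-conv, two-linear network."""
    s1 = conv_out(in_size, kernel=8, stride=4)  # first conv layer, 16 filters
    s2 = conv_out(s1, kernel=4, stride=2)       # second conv layer, 32 filters
    flat = s2 * s2 * 32                         # flattened input to the linear layer
    params = (
        (8 * 8 * in_channels * 16 + 16)         # conv1 weights + biases
        + (4 * 4 * 16 * 32 + 32)                # conv2 weights + biases
        + (flat * 256 + 256)                    # hidden linear layer
        + (256 * n_actions + n_actions)         # output layer, one unit per action
    )
    return {"conv1": (s1, s1, 16), "conv2": (s2, s2, 32), "flat": flat, "params": params}


info = dqn_shapes(n_actions=4)
print(info["conv1"], info["conv2"], info["flat"])  # (20, 20, 16) (9, 9, 32) 2592
print(info["params"])                              # 677172
```

Under these assumptions the whole model has well under a million parameters, most of them in the first linear layer.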

Results

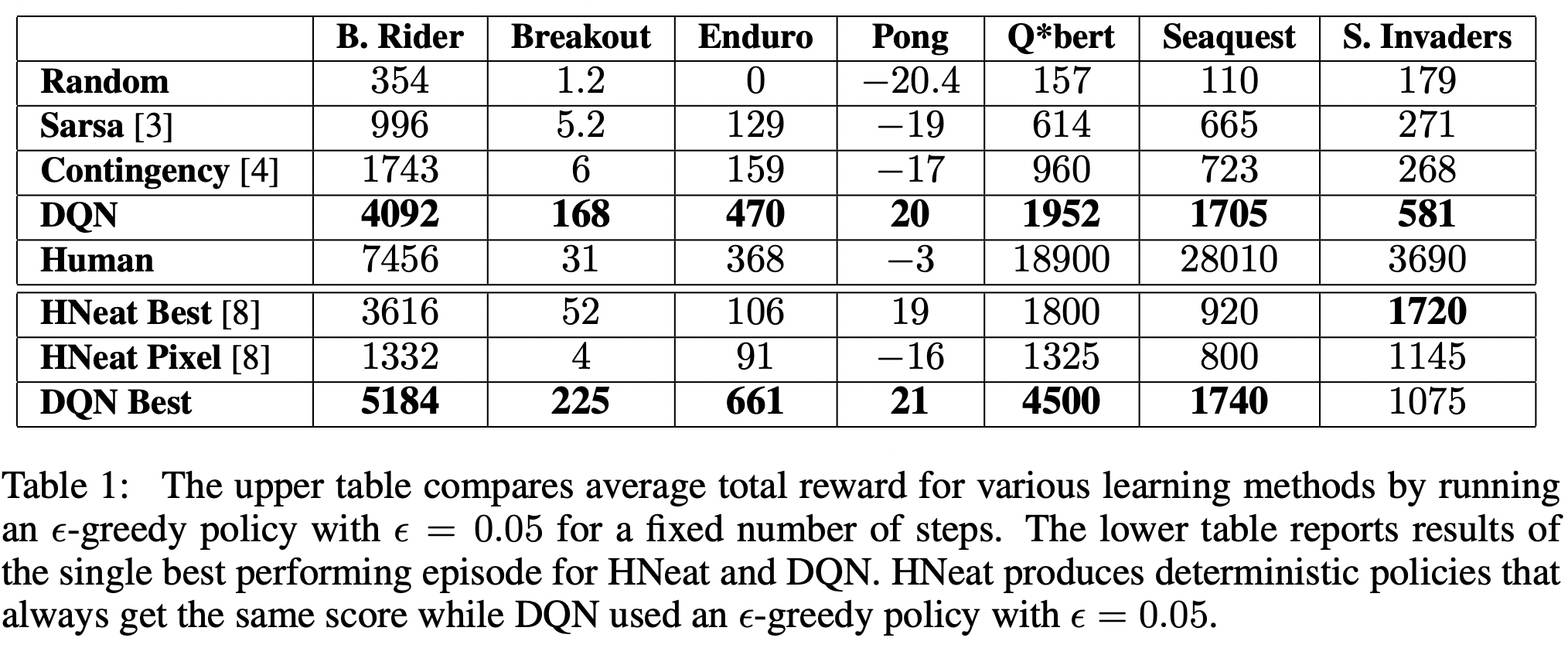

The model outperforms previous SOTA methods on six of the seven games and even beats an expert human player in three of them.

This is especially impressive considering that previous SOTA approaches used highly game-specific information to make predictions, while the Deep Q-Network learns from nothing but the pixel input.

Notably, humans are superior to the model in games that have a longer playing time. Intuitively this makes sense, given that the core task of the network - to approximate the Q function that returns the estimated reward until the end of the game - becomes more difficult with increasing game length.

🔮 Key Takeaways

😍 DL in RL! The paper demonstrated that it is possible to combine deep learning with reinforcement learning and thereby opened the door for a lot of research and applications.

🧠 Simplicity. Even small models can produce impressive results. Not every use case requires huge models with billions of parameters.

📣 Stay in touch

That’s it for this week. We hope you enjoyed reading this post. 😊 To stay updated about our activities, make sure you give us a follow on LinkedIn and subscribe to our Newsletter. Any questions or ideas for talks, collaboration, etc.? Drop us a message at hello@aitu.group.